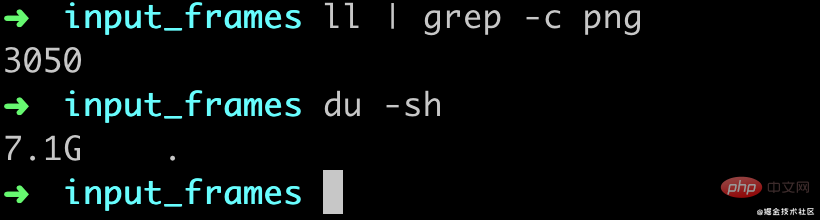

I have a video encoded in 16 bits. I cannot use OpenCV, as it does not support 16-bit video. The video is around 1 GB and each frame is 1M pixels.

I guess the closest thing to what I want is the following, but instead of filling the numpy array with the whole video, I want to read one frame at a time. I mean, it can do that, but I want to limit the amount of RAM used by my process. So I would like to extract one frame at a time and process it; otherwise, it won't fit in memory.

```python
out, _ = (
```

Or maybe it is possible to give ffmpeg a callback so that it returns a numpy array with all the frames already processed?

```python
import subprocess

# compile and output_operator are ffmpeg-python internals;
# _get_frame_size is my own helper (not shown here).
@output_operator(name='stream')
def stream(stream_spec, cmd='ffmpeg', capture_stderr=False, input=None,
           quiet=False, overwrite_output=False):
    """Invoke ffmpeg for the supplied node graph, streaming frame by frame."""
    args = compile(stream_spec, cmd, overwrite_output=overwrite_output)
    # calculate the frame size
    framesize = _get_frame_size(stream_spec)
    stdin_stream = subprocess.PIPE if input else None
    stdout_stream = subprocess.PIPE
    stderr_stream = subprocess.PIPE if capture_stderr or quiet else None
    p = subprocess.Popen(
        args, stdin=stdin_stream, stdout=stdout_stream, stderr=stderr_stream)
```

I calculate the frame size from the output node: if `video_size` and `pix_fmt` are given, from those; otherwise using ffprobe, returning width * height * number of channels. In the user code I can then convert each frame to a numpy array, similarly to the examples you have.
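A minimal sketch of the frame-at-a-time approach, assuming the ffmpeg CLI is on `PATH` and that the frame width and height are already known (for example from `ffmpeg.probe`). It does not use the proposed `stream` operator; it reads ffmpeg's stdout directly. The helper names `frame_size`, `iter_frames` and `open_raw_video`, and the `PIX_FMTS` table, are hypothetical. Because every raw frame has a fixed byte size, reading exactly that many bytes per iteration keeps only one frame in Python memory at a time. For 16-bit formats such as `gray16le` or `rgb48le` each sample takes 2 bytes, so the number of bytes to read per frame is width × height × channels × 2, not width × height × channels. A generator like this also covers the callback idea: the body of the caller's loop plays the role of the callback.

```python
import subprocess
from typing import BinaryIO, Iterator

import numpy as np

# Hypothetical table: (sample dtype, channel count) for a few raw pixel formats.
# Only these formats are handled; extend as needed.
PIX_FMTS = {
    "gray16le": (np.dtype("<u2"), 1),
    "rgb48le": (np.dtype("<u2"), 3),
    "gray": (np.dtype("u1"), 1),
    "rgb24": (np.dtype("u1"), 3),
}


def frame_size(width: int, height: int, pix_fmt: str) -> int:
    """Bytes in one raw frame: width * height * channels * bytes per sample."""
    dtype, channels = PIX_FMTS[pix_fmt]
    return width * height * channels * dtype.itemsize


def iter_frames(pipe: BinaryIO, width: int, height: int,
                pix_fmt: str) -> Iterator[np.ndarray]:
    """Yield frames one at a time from a raw video byte stream."""
    dtype, channels = PIX_FMTS[pix_fmt]
    size = frame_size(width, height, pix_fmt)
    while True:
        buf = pipe.read(size)  # blocks until a full frame or EOF
        if len(buf) < size:    # EOF; a partial trailing frame is dropped
            break
        # Read-only view over the bytes just read (no extra copy).
        yield np.frombuffer(buf, dtype).reshape(height, width, channels)


def open_raw_video(path: str, pix_fmt: str = "gray16le") -> subprocess.Popen:
    """Start ffmpeg decoding `path` to raw frames on its stdout."""
    cmd = ["ffmpeg", "-loglevel", "error", "-i", path,
           "-f", "rawvideo", "-pix_fmt", pix_fmt, "pipe:1"]
    return subprocess.Popen(cmd, stdout=subprocess.PIPE)
```

Usage would look like `proc = open_raw_video("in.mkv")`, then `for frame in iter_frames(proc.stdout, width, height, "gray16le"): ...`, then `proc.wait()`. The arrays returned by `np.frombuffer` are read-only; call `frame.copy()` if the processing needs to modify a frame in place.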
